Async programming (with C#)

In the world of programming you have probably noticed that the term “async programming” comes up very often, and more often than not someone will ask you (or tell you) to do a particular task “the asynchronous way”. But what does that even mean?

Before mentioning any concrete programming language (like C# or JavaScript), let’s try to understand what asynchronous programming is in a broader way.

To come closer to understanding, let’s see what async programming isn’t.

Parallel programming

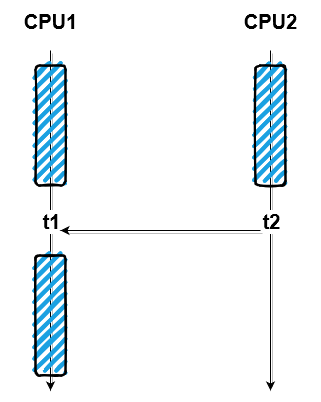

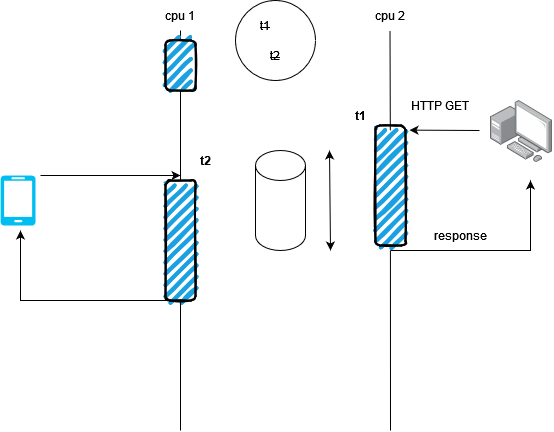

In parallel programming you (or rather the code you write) perform multiple calculations at the same point in time. This is usually CPU-bound work that you want to do “in parallel”. For instance, let’s say you have an image and you want to apply a filter or some other transformation to it. You could say: “I want to do the transformation for the first half of the image (pixel this to pixel that) on CPU1, and the other half on CPU2”. And right there we have an important distinction between parallel and async programming: for parallel programming you need multiple processors (or multiple CPU cores), and for async programming you don’t.

In the diagrams above we have an illustration of parallel programming. We have two threads, one running on each CPU. Thread t1 performs the image transformation mentioned above on the first part of the image, and t2 does the same for the other part, “in parallel”. At some point our code needs to handle the “assembling of the parts”, but let’s not dwell too much on that here.
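Here is a minimal C# sketch of the two-thread idea. It makes two hypothetical simplifications: the image is just a byte array of grayscale pixels, and the “filter” is a simple invert. `Task.Run` queues each half to the thread pool, so on a machine with two or more cores the two halves can be processed at the same time:

```csharp
using System;
using System.Threading.Tasks;

// A tiny grayscale "image": one byte per pixel (hypothetical stand-in for real image data).
byte[] pixels = new byte[1_000_000];
new Random(42).NextBytes(pixels);
byte[] original = (byte[])pixels.Clone();

// The filter: invert every pixel in the range [from, to).
static void Invert(byte[] image, int from, int to)
{
    for (int i = from; i < to; i++)
        image[i] = (byte)(255 - image[i]);
}

int half = pixels.Length / 2;

// t1 handles the first half, t2 the second half; on a multi-core CPU they can run at the same time.
Task t1 = Task.Run(() => Invert(pixels, 0, half));
Task t2 = Task.Run(() => Invert(pixels, half, pixels.Length));

// "Assembling the parts": both halves write into the same array, so we only need to wait for both.
await Task.WhenAll(t1, t2);

Console.WriteLine($"First pixel: {original[0]} -> {pixels[0]}");
```

In real code, `Parallel.For` does this kind of splitting for you. The explicit two-task version just mirrors the t1/t2 diagram.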

CPU blocking

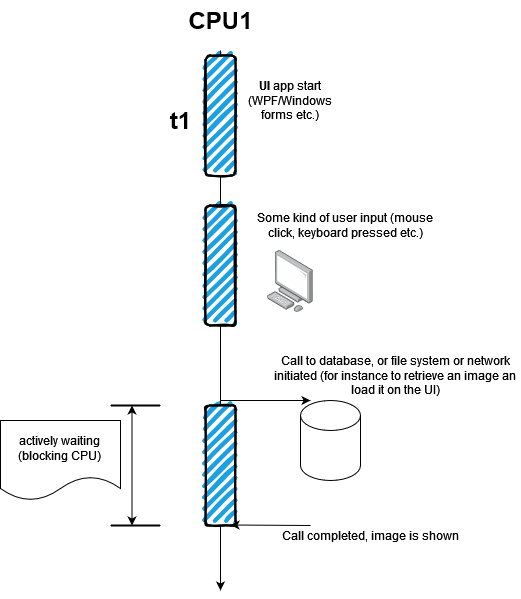

Let’s now imagine that we have a single-core CPU and a regular desktop app.

We are implementing some kind of UI application. When we start the program, after initialization the app waits for some kind of user input, so it is idle. At some point the user, for example, moves the mouse. The app becomes active, handles the mouse movement or keyboard input, and goes idle again.

At some point, the application decides to grab something from the disk, over the network, or from the database. That call takes some time to complete. If we do not use async programming, our thread will simply wait, blocked, until the call returns. It is as if you were staring at your watch, waiting for something to complete and doing nothing else.

So our thread is doing nothing until the database call is complete.

Since this same thread also drives the user interface (it is the UI thread), the application will not react to mouse moves, for instance. It will be frozen for the entire time, which is clearly not what we want.
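To make the freeze concrete, here is a minimal console sketch. There is no real UI or database here. `QueryDatabaseBlocking` is a hypothetical stand-in that uses `Thread.Sleep` to simulate a 300 ms query, and the “mouse move” is just the next line of code, which has to wait its turn on the same thread:

```csharp
using System;
using System.Diagnostics;
using System.Threading;

// Hypothetical stand-in for a slow database call: Thread.Sleep blocks the calling thread.
static string QueryDatabaseBlocking()
{
    Thread.Sleep(300); // the thread just waits here, unable to do anything else
    return "rows";
}

var sw = Stopwatch.StartNew();

// Imagine this runs in a button-click handler on the UI thread.
string rows = QueryDatabaseBlocking();

// A mouse move that arrived in the meantime only gets handled now, after the call returns.
long mouseMoveHandledAfterMs = sw.ElapsedMilliseconds;
Console.WriteLine($"Got '{rows}'; mouse move handled after {mouseMoveHandledAfterMs} ms");
```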

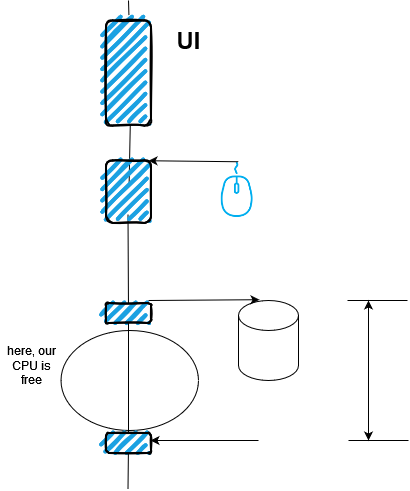

How can we fix this? We run only a little bit of code, just enough to issue the database call, and then return instead of waiting.

Now we are using async processing, letting the database do its work in the background. Something else (the database) is busy, not our CPU.

When that work is done, we are actively notified. At some point the database tells us that it has finished, and that triggers another piece of asynchronous code, where we can process the results of the database query.

The important thing is that in the meantime, our CPU, our UI thread, is free.
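The same scenario written with `async`/`await` might look like the following minimal console sketch. `QueryDatabaseAsync` is a hypothetical stand-in for a real asynchronous data-access call such as `DbCommand.ExecuteReaderAsync`. It uses `Task.Delay`, which completes via a timer without holding a thread. A console app has no UI message loop, so the small polling loop only simulates the UI staying free to handle mouse moves. In a real WinForms or WPF event handler you would simply `await` the call:

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical stand-in for an asynchronous database call.
// Task.Delay completes via a timer, so no thread is blocked while we wait.
static async Task<string> QueryDatabaseAsync()
{
    await Task.Delay(300);
    return "rows";
}

// Issue the call: this returns an unfinished Task right away instead of blocking.
Task<string> pending = QueryDatabaseAsync();

// Meanwhile the caller stays free. A real UI framework would keep pumping its
// message loop; here a simple loop simulates handling mouse moves.
int mouseMovesHandled = 0;
while (!pending.IsCompleted)
{
    mouseMovesHandled++;
    await Task.Delay(10);
}

// The continuation: process the result once the "database" signals completion.
string rows = await pending;
Console.WriteLine($"Handled {mouseMovesHandled} mouse moves while waiting, then got '{rows}'");
```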

Web server

Now let’s imagine that we are building a C# app with a built-in web server (Kestrel), running as a single process. What about threads? How many threads will our web server create? It turns out, at least in the case of C#/.NET, that these web servers use a pool of threads. By default, the thread pool starts with a minimum number of worker threads equal to the number of CPU cores. Why so few (just two on a dual-core machine), you might ask? Why not 100? Because threads are not cheap: each one needs its own memory (for example, its own stack), and it also takes some time to create them.

This thread pool has a (configurable) limit on the number of threads, because the pool cannot and should not grow indefinitely. It can grow beyond the number of available CPU cores, and it does add threads when work piles up. But you typically want to keep the number as low as possible to keep your web server efficient; otherwise you will be consuming a lot of memory.
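You can inspect these limits from C#. The small sketch below uses `ThreadPool.GetMinThreads` and `ThreadPool.GetMaxThreads`; the `ThreadPool.ThreadCount` property needs .NET Core 3.0 or later. The exact numbers depend on your machine, runtime version, and configuration (`ThreadPool.SetMinThreads` and `ThreadPool.SetMaxThreads` can change them), so no output is shown here:

```csharp
using System;
using System.Threading;

// Read the thread pool's current limits for this process.
ThreadPool.GetMinThreads(out int minWorker, out int minIo);
ThreadPool.GetMaxThreads(out int maxWorker, out int maxIo);

Console.WriteLine($"CPU cores:                 {Environment.ProcessorCount}");
Console.WriteLine($"Worker threads (min/max):  {minWorker}/{maxWorker}");
Console.WriteLine($"I/O threads (min/max):     {minIo}/{maxIo}");
Console.WriteLine($"Threads currently in pool: {ThreadPool.ThreadCount}");
```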

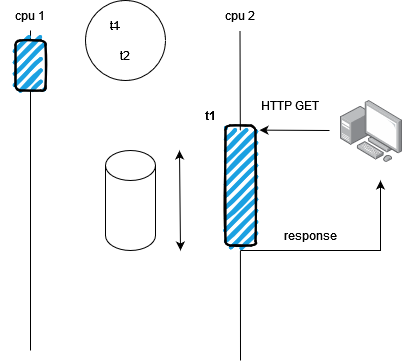

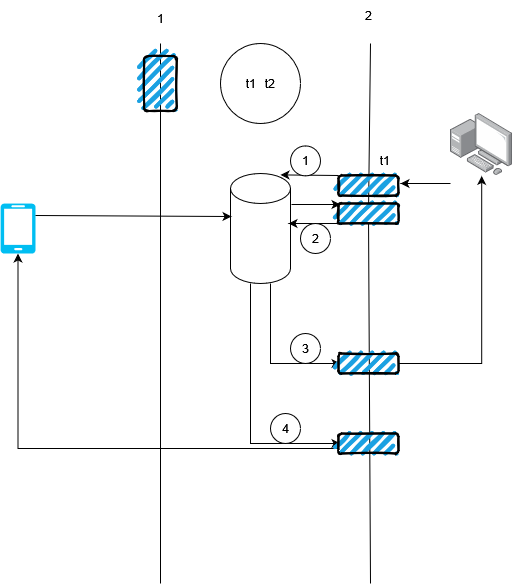

Let’s take a look at the diagrams above. Imagine we have a CPU with two cores and the web server we just described. We have two threads in the thread pool. Each HTTP request is assigned an idle thread from the thread pool.

Our thread t1 is executing on CPU2. It needs some time to process the request, and let’s imagine it also needs to query the database for some additional information. If we don’t use async programming, our thread will be blocked for quite some time, and in the end it sends the result (response) back to the client.

The problem is that we don’t have just one client, we have multiple clients.

Imagine, for example, that a client on a phone issues another HTTP request. Thread t2 is still available in the thread pool, so it takes this request.

Again, t2 spends some time processing the request, requests additional data from the database, and so on.

Now we have a problem. What about a 3rd, a 4th, and so on, or hundreds of HTTP requests? Both threads are blocked waiting for the database, so new requests have to wait. In the background the thread pool will try to help by creating additional threads (t3, t4, t5…). However, it adds them only gradually, each one costs memory, and we still have only a limited number of CPUs (2 in our example). Most of these threads end up sitting idle, blocked on I/O, while requests keep queuing up.
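Here is a minimal simulation of this bottleneck, under deliberately simplified assumptions. Instead of the real thread pool (which would slowly inject extra threads), the hypothetical “server” below has exactly two dedicated worker threads, mirroring the two-core diagram. Each request blocks for 200 ms on a simulated database call. Six requests on two blocked threads need at least three rounds, so the total is roughly 3 × 200 ms:

```csharp
using System;
using System.Collections.Concurrent;
using System.Diagnostics;
using System.Threading;

const int WorkerThreadCount = 2; // like the two-core machine in the diagram
const int RequestCount = 6;      // more clients than threads

// Incoming HTTP requests waiting to be picked up (hypothetical request IDs).
var incoming = new BlockingCollection<int>();
for (int id = 1; id <= RequestCount; id++) incoming.Add(id);
incoming.CompleteAdding();

var sw = Stopwatch.StartNew();
var workers = new Thread[WorkerThreadCount];
for (int t = 0; t < WorkerThreadCount; t++)
{
    workers[t] = new Thread(() =>
    {
        foreach (int request in incoming.GetConsumingEnumerable())
        {
            // Blocking "database call": while sleeping, this thread serves nobody else.
            Thread.Sleep(200);
        }
    });
    workers[t].Start();
}

foreach (Thread worker in workers) worker.Join();
long blockingElapsedMs = sw.ElapsedMilliseconds;
Console.WriteLine($"{RequestCount} requests on {WorkerThreadCount} blocking threads: {blockingElapsedMs} ms");
```

Adding more threads would shorten the queue, but only by paying for more threads, which is exactly the cost described above.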

Async programming to the rescue

Let’s now see how asynchronous programming solves these issues. We have the same scenario as above. An HTTP GET comes in and thread t1 is processing the request, but when the database call is issued (to retrieve additional info), we hand thread t1 back to the thread pool.

So now, when our second user with the phone issues a request, it can use thread t1 again, performing a little bit of processing of the incoming web request and accessing the database. When the first database call completes, t1 (or any other free thread) picks up its result, processes it, and returns the response to the first client.

If you look at it now, we only need a single thread and we can process multiple HTTP requests at the same time. We are not doing parallel programming here; we are doing asynchronous programming. The important takeaway is that you should always use asynchronous programming when you are doing non-CPU-bound (I/O-bound) work: accessing the database, the file system, the network, etc.

If you program like this, your web server will be really efficient and it will scale really well. It can handle many more requests than if you do blocking programming.
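For comparison, here is a sketch of the asynchronous version with six hypothetical requests and the same 200 ms simulated database call (`Task.Delay` standing in for real asynchronous I/O). Each handler runs synchronously until its first `await`, so all six start on the calling thread and then release it while they wait. Because the waits overlap, the whole batch takes roughly 200 ms instead of roughly 600 ms:

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Linq;
using System.Threading.Tasks;

const int RequestCount = 6;
var startThreadIds = new List<int>();

// Hypothetical async request handler: a little processing, then a non-blocking "database call".
async Task<string> HandleRequestAsync(int id)
{
    // Runs synchronously on the caller's thread up to the first await.
    startThreadIds.Add(Environment.CurrentManagedThreadId);
    await Task.Delay(200); // no thread is held while the "database" works
    return $"response {id}";
}

var sw = Stopwatch.StartNew();
var pending = new List<Task<string>>();
for (int id = 1; id <= RequestCount; id++)
    pending.Add(HandleRequestAsync(id));

string[] responses = await Task.WhenAll(pending);
long asyncElapsedMs = sw.ElapsedMilliseconds;

Console.WriteLine($"{RequestCount} requests started on {startThreadIds.Distinct().Count()} thread(s): {asyncElapsedMs} ms");
```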

In web server development you don't need to do parallel programming on your own; the web server does it for you, at least as far as ASP.NET Core (Kestrel) goes.

Node.js, however, is different, as it runs your JavaScript on a single thread.

This is one reason why so many web servers in the cloud run a single (JavaScript) thread per process: if you need more capacity, you spin up additional servers.

A Docker container running such a server, for instance, is often effectively single-threaded. From the point of view of a single server there is no multithreading, but from the overall point of view there is, because you have multiple servers.

Note: I tried to summarize in a written form this excellent lecture about async programming by Rainer Stropek